IT has two principal functions: build a network that meets the company's business needs, and then optimize that network. Unfortunately, the modern enterprise is buried under a myriad of network improvement projects, many of which never get deployed.

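One example is Cisco's NetFlow protocol and its close relative IPFIX, the IETF's standardized flow export protocol. IPFIX is based on NetFlow version 9 and carries version number 10 in its message header, which is why it is sometimes called NetFlow version 10.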
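
The "version 10" label comes straight from the wire format. As a minimal sketch, assuming only the fixed 16-byte IPFIX message header defined in RFC 7011 (version, length, export time, sequence number, observation domain ID), the Python below decodes that header and checks the version field. The names `IpfixHeader` and `parse_ipfix_header` are illustrative, and parsing the template and data sets that follow the header is out of scope.

```python
import struct
from typing import NamedTuple

# IPFIX message header (RFC 7011): five big-endian fields, 16 bytes in total.
IPFIX_HEADER = struct.Struct("!HHIII")
IPFIX_VERSION = 10  # the value behind the "NetFlow v10" nickname


class IpfixHeader(NamedTuple):
    version: int
    length: int                 # total message length in bytes, header included
    export_time: int            # seconds since the UNIX epoch
    sequence_number: int
    observation_domain_id: int


def parse_ipfix_header(data: bytes) -> IpfixHeader:
    """Decode the fixed header at the start of an IPFIX message."""
    if len(data) < IPFIX_HEADER.size:
        raise ValueError("message is shorter than the 16-byte IPFIX header")
    header = IpfixHeader(*IPFIX_HEADER.unpack_from(data))
    if header.version != IPFIX_VERSION:
        raise ValueError(f"unexpected version {header.version}; IPFIX uses 10")
    return header


if __name__ == "__main__":
    # Hand-built, header-only message for illustration (no data sets follow).
    sample = IPFIX_HEADER.pack(10, 16, 1_700_000_000, 42, 1)
    print(parse_ipfix_header(sample))
```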

NetFlow provides summarized network data that offers many benefits, including the ability to:

■ Identify network bottlenecks

■ Detect denial-of-service (DoS) attacks

■ Determine where to deploy quality of service (QoS) improvements

■ Manage resource utilization of network components

Flow data can be sent to performance monitoring and security information and event management (SIEM) tools to help manage the network more effectively. Some solutions use NetFlow data to optimize network QoS and user experience. NetFlow data can also be used to build performance trends that improve network management and monitoring.

While this technology has been available for years, many enterprises are reluctant to deploy it fully, and for good reason. Enabling NetFlow can increase switch CPU load by 10% or more. In addition, NetFlow must be configured manually through a command line interface (CLI), and it has to be enabled on all of the network switches to deliver its full benefit. As a result, the performance degradation and risk associated with this technology have been barriers to deployment.

To activate NetFlow, each Layer 2 and Layer 3 routing switch must have it turned on; otherwise, complete statistics will not be captured. Each switch then acts as a flow record generator and sends its data to a NetFlow collector for correlation and interpretation. When activated, full (unsampled) NetFlow generation places a significant load on the routing switches. One workaround is sampling, in which the switch examines only a subset of packets (for example, one packet in every N) instead of accounting for every packet, at some cost in accuracy.
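
To show why sampling lightens the load but loses detail, here is a minimal Python sketch of a flow cache. It assumes a flow is identified by the classic 5-tuple and uses a simple deterministic 1-in-N sampler whose counts are scaled by N; real switches use their own (often random) sampling methods and also track timestamps, TCP flags, and flow timeouts. `FlowKey`, `FlowStats`, and `build_flow_cache` are hypothetical names.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable, NamedTuple


class FlowKey(NamedTuple):
    """The classic 5-tuple used here to identify a flow."""
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: int  # IP protocol number: 6 = TCP, 17 = UDP


@dataclass
class FlowStats:
    packets: int = 0
    octets: int = 0


def build_flow_cache(
    packets: Iterable[tuple[FlowKey, int]], sample_every: int = 1
) -> dict[FlowKey, FlowStats]:
    """Aggregate (flow key, packet length) pairs into per-flow counters.

    sample_every=1 accounts for every packet (unsampled). With sample_every=N,
    only every Nth packet is inspected (deterministic 1-in-N sampling) and each
    inspected packet is scaled by N to estimate the true totals.
    """
    if sample_every < 1:
        raise ValueError("sample_every must be at least 1")
    cache: defaultdict[FlowKey, FlowStats] = defaultdict(FlowStats)
    for index, (key, length) in enumerate(packets):
        if index % sample_every:
            continue  # this packet was not selected by the sampler
        stats = cache[key]
        stats.packets += sample_every
        stats.octets += length * sample_every
    return dict(cache)


if __name__ == "__main__":
    web = FlowKey("192.0.2.10", "198.51.100.7", 51000, 443, 6)
    dns = FlowKey("192.0.2.20", "198.51.100.53", 53000, 53, 17)
    # Six 1500-byte web packets interleaved with two 64-byte DNS packets.
    traffic = [(web, 1500)] * 3 + [(dns, 64)] + [(web, 1500)] * 3 + [(dns, 64)]
    print(build_flow_cache(traffic))                  # exact counts
    print(build_flow_cache(traffic, sample_every=4))  # estimates; DNS flow missed
```

With full accounting, the web flow shows 6 packets and 9,000 bytes. With 1-in-4 sampling it is estimated at 8 packets and 12,000 bytes, and the two-packet DNS flow disappears entirely. Small, short-lived flows are exactly the ones sampling tends to miss.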

Setting this up requires significant effort. Beyond configuring each switch initially, the configuration must be updated on an ongoing basis as the network and NetFlow requirements change. This is especially burdensome if your network has more than twenty switches. Because each switch is an active component of the network, these changes are normally made during a maintenance window and typically require Change Board approval (and the board may convene only once per month). Change Board approval is required because a mistake during CLI configuration could inadvertently take a switch out of service and disrupt the network.

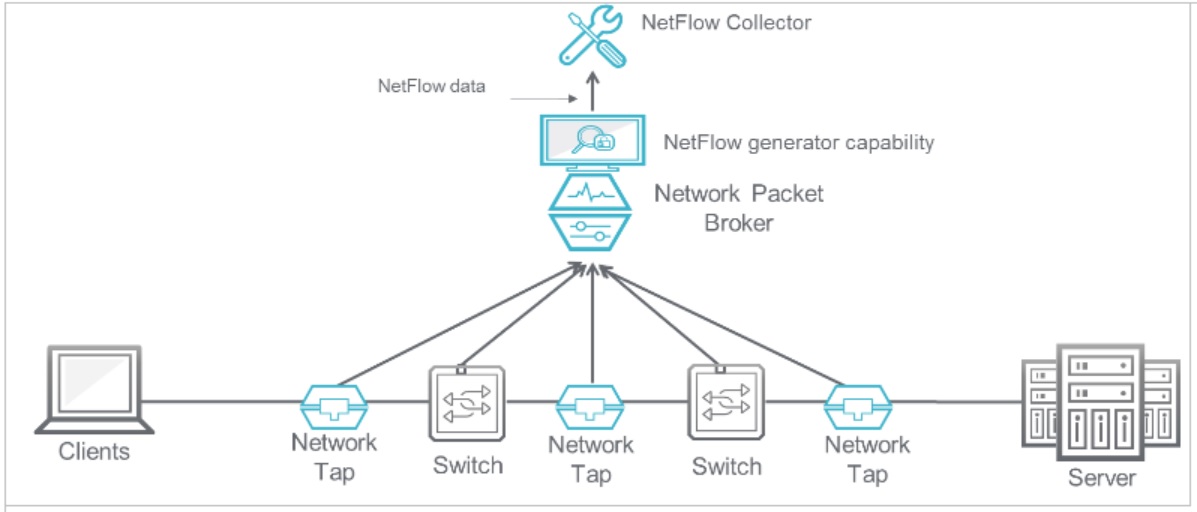

To eliminate this risk of service disruption, taps and a network packet broker (NPB) can be installed in the network. Installing taps causes only a one-time disruption, and taps are very safe because they are passive devices. Once a tap is installed, an NPB can be connected to it without causing any service disruption.

A good NPB has built-in software that can generate NetFlow data from the tapped traffic without sampling (i.e., at full line rate) and pass that data on to a collector, which is typically a performance monitoring tool. The NPB should also be able to deduplicate multiple copies of the same packet. This reduces the volume of traffic used for NetFlow generation, and therefore the amount of NetFlow data generated and passed across the network.
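
When the same packet is captured at two tap points, it would otherwise be counted twice. The following is a minimal Python sketch of window-based deduplication, one common approach and not a description of any particular NPB. It assumes timestamps never go backwards and treats a duplicate as an exact byte-for-byte copy identified by its SHA-256 digest; real implementations may compare only selected fields so that values rewritten in transit (such as TTL or checksums) do not prevent a match. The `Deduplicator` class and its 50 ms default window are hypothetical.

```python
import hashlib
from collections import OrderedDict


class Deduplicator:
    """Flag packets whose exact bytes were already seen within a time window.

    Sketch assumptions: packets arrive with non-decreasing timestamps, and a
    duplicate is an exact byte-for-byte copy, identified by its SHA-256 digest.
    """

    def __init__(self, window_seconds: float = 0.05) -> None:
        self.window = window_seconds
        # digest -> time first seen; insertion order keeps the oldest entry first
        self._seen: "OrderedDict[bytes, float]" = OrderedDict()

    def is_duplicate(self, packet: bytes, timestamp: float) -> bool:
        # Expire digests that have fallen outside the window.
        while self._seen:
            _, first_seen = next(iter(self._seen.items()))
            if timestamp - first_seen <= self.window:
                break
            self._seen.popitem(last=False)
        digest = hashlib.sha256(packet).digest()
        if digest in self._seen:
            return True  # same packet captured again, e.g. at a second tap
        self._seen[digest] = timestamp
        return False


if __name__ == "__main__":
    dedup = Deduplicator(window_seconds=0.05)
    captures = [(b"pkt-A", 0.000), (b"pkt-A", 0.002), (b"pkt-B", 0.003), (b"pkt-A", 1.0)]
    for payload, ts in captures:
        print(payload, ts, "duplicate" if dedup.is_duplicate(payload, ts) else "forward")
```

Only packets that pass the check would be fed to NetFlow generation. The window bounds memory use: a copy seen within 50 ms is dropped, while the same bytes arriving a second later are treated as a new packet.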

In addition, some NPB vendors support add-on NetFlow metadata such as application identification (FTP, HTTP, HTTPS, Netflix, Hulu, etc.), geolocation of flows, device type, browser type, and autonomous system (AS) information. This metadata provides actionable information that helps you optimize your network.
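
As a rough illustration of how such metadata can be attached to a flow record, the Python sketch below adds an application label and a destination AS number. It makes two simplifying assumptions: the application is guessed from well-known destination ports (services such as Netflix or Hulu cannot be identified this way and need deeper inspection), and AS numbers come from a small hypothetical table searched by longest-prefix match. The prefixes are RFC 5737 documentation ranges and the AS numbers come from the RFC 5398 documentation range, so none of it is real routing data.

```python
import ipaddress
from typing import Optional

# Hypothetical enrichment tables (documentation prefixes and AS numbers only).
PREFIX_TO_ASN = {
    ipaddress.ip_network("198.51.100.0/24"): 64496,
    ipaddress.ip_network("198.51.100.128/25"): 64497,
    ipaddress.ip_network("203.0.113.0/24"): 64498,
}
WELL_KNOWN_PORTS = {21: "FTP", 80: "HTTP", 443: "HTTPS"}


def lookup_asn(address: str) -> Optional[int]:
    """Longest-prefix match of an address against PREFIX_TO_ASN."""
    ip = ipaddress.ip_address(address)
    matches = [net for net in PREFIX_TO_ASN if ip in net]
    if not matches:
        return None
    return PREFIX_TO_ASN[max(matches, key=lambda net: net.prefixlen)]


def enrich(flow: dict) -> dict:
    """Return a copy of a flow record with application and destination AS added."""
    enriched = dict(flow)
    enriched["application"] = WELL_KNOWN_PORTS.get(flow["dst_port"], "unknown")
    enriched["dst_asn"] = lookup_asn(flow["dst_ip"])
    return enriched


if __name__ == "__main__":
    print(enrich({"dst_ip": "198.51.100.200", "dst_port": 443}))
    print(enrich({"dst_ip": "192.0.2.1", "dst_port": 9999}))
```

Longest-prefix match is used because a more specific route (the /25 here) should win over a broader one that also contains the address.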

The following diagram illustrates an NPB serving as a NetFlow generator.

While NetFlow is a free feature on Cisco Layer 2 and Layer 3 routing switches, using it can create significant costs. When NetFlow is configured directly on network switches, numerous parameters must be set, including enabling the NetFlow feature, defining each specific flow needed, the direction of flow capture, and where the data will be exported. All of this is done through the CLI.
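
To give a sense of the per-switch CLI work, the Python sketch below renders a configuration in the style of Cisco IOS Flexible NetFlow for a list of interfaces: a flow record (which flow to capture), an exporter (where to send it), a monitor tying the two together, and per-interface enablement with a capture direction. The record, exporter, and monitor names, the collector address, and the switch inventory are hypothetical, and exact command syntax varies by platform and software release, so treat the output as a starting point to check against Cisco's documentation rather than a validated configuration.

```python
def flexible_netflow_config(
    interfaces: list[str],
    collector_ip: str,
    collector_port: int = 2055,
    direction: str = "input",
) -> str:
    """Render an IOS-style Flexible NetFlow configuration (names are hypothetical)."""
    if direction not in ("input", "output"):
        raise ValueError("direction must be 'input' or 'output'")
    lines = [
        "flow record NF-RECORD",            # which flow to capture
        " match ipv4 source address",
        " match ipv4 destination address",
        " match ipv4 protocol",
        " match transport source-port",
        " match transport destination-port",
        " collect counter bytes",
        " collect counter packets",
        "flow exporter NF-EXPORTER",        # where the data will be exported
        f" destination {collector_ip}",
        f" transport udp {collector_port}",
        " export-protocol netflow-v9",
        "flow monitor NF-MONITOR",          # ties the record to the exporter
        " record NF-RECORD",
        " exporter NF-EXPORTER",
    ]
    for name in interfaces:                 # enablement and capture direction
        lines += [f"interface {name}", f" ip flow monitor NF-MONITOR {direction}"]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical inventory: every switch needs its own copy applied via CLI.
    inventory = {
        "core-sw-01": ["GigabitEthernet1/0/1", "GigabitEthernet1/0/2"],
        "access-sw-07": ["GigabitEthernet1/0/24"],
    }
    for switch, ports in inventory.items():
        print(f"! ---- {switch} ----")
        print(flexible_netflow_config(ports, "192.0.2.50"), end="")
```

Generating the text is the easy part; each rendered block still has to be applied to its switch, which is where the maintenance-window and Change Board overhead comes in.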

A good NPB uses a graphical user interface (GUI) instead of a CLI. The GUI is simple and intuitive; no heavy programming is needed, since it is all drag and drop. In addition, no maintenance windows or Change Board approvals are needed, so changes can be made whenever needed and take effect immediately. This can dramatically reduce troubleshooting times and help maintain a higher level of network performance. It is especially important when changes are needed to address security issues or to collect data on suspicious network activity.

Eliminating maintenance-window delays and adopting a significantly faster configuration interface can reduce costs. This is especially true once the CPU impact of running NetFlow on the Layer 2 and Layer 3 routing switches is considered. Offloading that 10 to 20% performance hit to the NPB (which can process NetFlow at line rate without slowdowns) also reduces cost and frees switch capacity for processing network traffic.

In the end, NetFlow is a valuable network switch feature for optimization. However, it must be turned on to deliver any benefit, and the ongoing cost and effort of using it must also be addressed.