Very often, when an enterprise starts on the virtual desktop journey, the focus is on the user desktop. This is only natural: after all, it is the desktop that is moving from a physical system to a virtual machine.

Therefore, once a decision to try out VDI is made, the primary focus is to benchmark the performance of physical desktops, model their usage, predict the virtualized user experience and, based on the results, determine which desktops can be virtualized and which cannot. This is what many people refer to as “VDI assessment”.

One of the fundamental changes with VDI is that the desktops no longer have dedicated resources. They share the resources of the physical machine on which they are hosted and they may even be using a common storage subsystem.

While resource sharing provides several benefits, it also introduces new complications. A single malfunctioning desktop can consume so many resources that it degrades the performance of all the other desktops on the same host. In the physical world, the impact of a failure or a slowdown was minimal (if a physical desktop failed, it would impact only one user). In the virtual world, the impact is much more severe (one failure can impact hundreds of desktops). Therefore, even in the VDI assessment phase, it is important to take performance considerations into account.

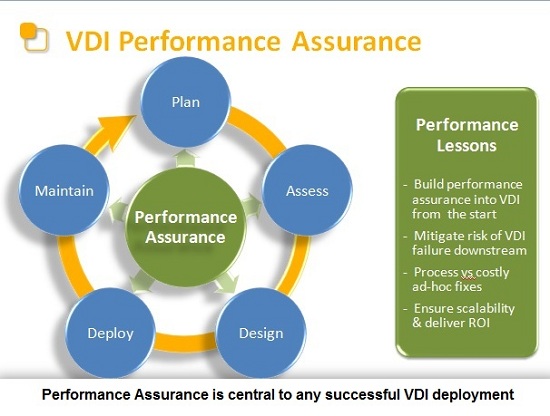

In fact, performance has to be considered at every stage of the VDI lifecycle because it is fundamental to the success or failure of the VDI rollout. The new types of inter-desktop dependencies that exist in VDI have to be accounted for at every stage.

For example, in many of the early VDI deployments, administrators found that when they just migrated the physical desktops to VDI, backups or antivirus software became a problem. These software components were scheduled to run at the same time on all the desktops. When the desktops were physical, it didn’t matter, because each desktop had dedicated hardware. With VDI, the synchronized demand for resources from all the desktops severely impacted the performance of the virtual desktops. This was not something that was anticipated because the focus of most designs and plans was on the individual desktops.
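One common way to avoid this kind of synchronized demand is to stagger the scheduled jobs so that only a few desktops start at the same time. The following is a minimal sketch of that idea, not a description of any particular backup or antivirus product. The function name `stagger_start_times`, the desktop names and the batch size are all hypothetical and chosen only for illustration.

```python
from datetime import datetime, timedelta


def stagger_start_times(desktops, window_start, window, batch_size):
    """Spread job start times across a maintenance window.

    Desktops are grouped into batches of at most `batch_size`; each batch
    starts one slot later than the previous one, so no more than
    `batch_size` desktops begin their job at the same moment.
    """
    if not desktops:
        return {}
    batches = [
        desktops[i:i + batch_size]
        for i in range(0, len(desktops), batch_size)
    ]
    slot = window / len(batches)  # time between consecutive batch starts
    schedule = {}
    for index, batch in enumerate(batches):
        for name in batch:
            schedule[name] = window_start + index * slot
    return schedule


# Hypothetical example: six desktops, a three-hour window, two per batch.
desktops = ["vd-%02d" % i for i in range(6)]
schedule = stagger_start_times(
    desktops,
    window_start=datetime(2024, 1, 1, 1, 0),
    window=timedelta(hours=3),
    batch_size=2,
)
for name in desktops:
    print(name, schedule[name].strftime("%H:%M"))
```

The right batch size depends on how much load a single scan or backup places on the shared host and storage, which is exactly the kind of figure a performance-aware assessment should produce.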

Understanding the performance requirements of desktops may also help plan the virtual desktop infrastructure more efficiently. For example, users who are known to be heavy CPU consumers can be load balanced across servers. Likewise, by assigning a good mix of CPU-intensive and memory-intensive user desktops to each physical server, it is possible to get optimal usage of the existing hardware resources.
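The mixing of CPU-intensive and memory-intensive desktops can be illustrated with a simple greedy placement. This is only a sketch under stated assumptions: each desktop's demand is expressed as a fraction of one host's CPU and memory capacity, the figures are made up, and `place_desktops` is a hypothetical helper, not a feature of any VDI product. Real placement would also need to account for storage, network and peak-versus-average usage.

```python
def place_desktops(desktops, host_count):
    """Greedily assign desktops to hosts, balancing CPU and memory.

    `desktops` is a list of (name, cpu, mem) tuples, where cpu and mem are
    fractions of a single host's capacity. The most demanding desktops are
    placed first, each on the host whose busiest resource (CPU or memory)
    would be lowest after the placement.
    """
    hosts = [
        {"cpu": 0.0, "mem": 0.0, "desktops": []}
        for _ in range(host_count)
    ]
    ordered = sorted(desktops, key=lambda d: max(d[1], d[2]), reverse=True)
    for name, cpu, mem in ordered:
        best = min(
            hosts,
            key=lambda h: max(h["cpu"] + cpu, h["mem"] + mem),
        )
        best["cpu"] += cpu
        best["mem"] += mem
        best["desktops"].append(name)
    return hosts


# Hypothetical demand figures: two CPU-heavy and two memory-heavy desktops.
demand = [
    ("cpu-a", 0.4, 0.1),
    ("cpu-b", 0.4, 0.1),
    ("mem-a", 0.1, 0.4),
    ("mem-b", 0.1, 0.4),
]
for host in place_desktops(demand, host_count=2):
    print(host["desktops"], round(host["cpu"], 2), round(host["mem"], 2))
```

With these figures, each host ends up with one CPU-heavy and one memory-heavy desktop, rather than both CPU-heavy desktops competing for the same processors.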

Lessons Learned

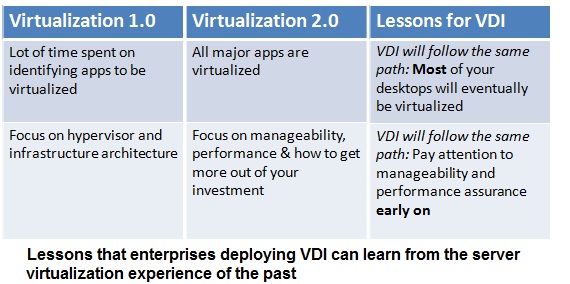

Taking this discussion one step further, it is interesting to draw a parallel with how server virtualization evolved and to see what lessons we can learn as far as VDI is concerned.

A lot of the emphasis in the early days was on determining which applications could be virtualized and which ones could not. Today, server virtualization technology has evolved to a point where there are more virtual machines being deployed in a year than physical machines, and almost every application server (except very old legacy ones) is virtualized fairly well. You no longer hear anyone asking whether this application server can be virtualized or not. Virtualization vendors, having initially focused on the hypervisor, have realized that performance and manageability are key to the success of server virtualization deployments.

VDI deployments could be done more rapidly and more successfully if we learn our lessons from how server virtualization evolved. VDI assessment needs to expand its focus beyond just the desktop and look at the entire infrastructure. During VDI rollouts, attention has to be paid to performance management and assurance. To avoid a lot of rework and problem remediation down the line, performance assurance must be considered early in the process and at every stage. This is key to getting VDI deployed on a bigger scale and faster, with a strong return on investment (ROI).