By embracing End-User-Experience (EUE) measurements as a key vehicle for demonstrating productivity, you build trust with your constituents in a very tangible way. The translation of IT metrics into business meaning (value) is what APM is all about.

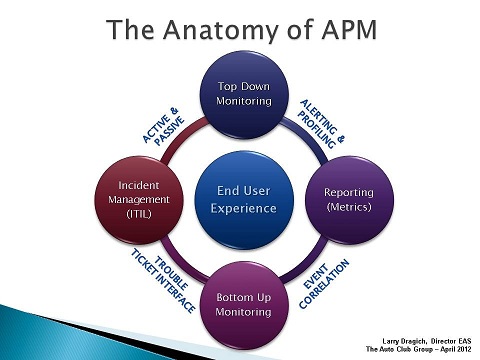

The goal here is to simplify a complicated technology space by walking through a high-level view within each core element. I’m suggesting that the success factors in APM adoption center around the EUE and the integration touch points with the Incident Management process.

When looking at APM at 20,000 feet, four foundational elements come into view:

- Top Down Monitoring (RUM)

- Bottom Up Monitoring (Infrastructure)

- Incident Management Process (ITIL)

- Reporting (Metrics)

Top Down Monitoring

Top Down Monitoring is also referred to as Real-time Application Monitoring that focuses on the End-User-Experience. It has two has two components, Passive and Active. Passive monitoring is usually an agentless appliance which leverages network port mirroring. This low risk implementation provides one of the highest values within APM in terms of application visibility for the business.

Active monitoring, on the other hand, consists of synthetic probes and web robots which help report on system availability and predefined business transactions. This is a good complement when used with passive monitoring to help provide visibility on application health during off peak hours when transaction volume is low.

Bottom Up Monitoring

Bottom Up Monitoring is also referred to as Infrastructure Monitoring which usually ties into an operations manager tool and becomes the central collection point where event correlation happens. Minimally, at this level up/down monitoring should be in place for all nodes/servers within the environment. System automation is the key component to the timeliness and accuracy of incidents being created through the Trouble Ticket Interface.

Incident Management Process

The Incident Management Process as defined in ITIL is a foundational pillar to support Application Performance Management (APM). In our situation, Incident Management, Problem Management, and Change Management processes were already established in the culture for a year prior to us beginning to implement the APM strategies.

A look into ITIL's Continual Service Improvement (CSI) model and the benefits of Application Performance Management indicates they are both focused on improvement, with APM defining toolsets that tie together specific processes in Service Design, Service Transition, and Service Operation.

Reporting Metrics

Capturing the raw data for analysis is essential for an APM strategy to be successful. It is important to arrive at a common set of metrics that you will collect and then standardize on a common view on how to present the real-time performance data.

Your best bet: Alert on the Averages and Profile with Percentiles. Use 5 minute averages for real-time performance alerting, and percentiles for overall application profiling and Service Level Management.

Conclusion

As you go deeper in your exploration of APM and begin sifting through the technical dogma (e.g. transaction tagging, script injection, application profiling, stitching engines, etc.) for key decision points, take a step back and ask yourself why you're doing this in the first place: To translate IT metrics into an End-User-Experience that provides value back to the business.

If you have questions on the approach and what you should focus on first with APM, see Prioritizing Gartner's APM Model for insight on some best practices from the field.

You can contact Larry on LinkedIn

Larry Dragich of AAA Joins The BSM Blog

For a high-level view of a much broader technology space refer to slide show on BrightTALK.com which describes “The Anatomy of APM - webcast” in more context.

The Latest

Organizations are continuing to embrace multicloud environments and cloud-native architectures to enable rapid transformation and deliver secure innovation. However, despite the speed, scale, and agility enabled by these modern cloud ecosystems, organizations are struggling to manage the explosion of data they create, according to The state of observability 2024: Overcoming complexity through AI-driven analytics and automation strategies, a report from Dynatrace ...

Organizations recognize the value of observability, but only 10% of them are actually practicing full observability of their applications and infrastructure. This is among the key findings from the recently completed Logz.io 2024 Observability Pulse Survey and Report ...

Businesses must adopt a comprehensive Internet Performance Monitoring (IPM) strategy, says Enterprise Management Associates (EMA), a leading IT analyst research firm. This strategy is crucial to bridge the significant observability gap within today's complex IT infrastructures. The recommendation is particularly timely, given that 99% of enterprises are expanding their use of the Internet as a primary connectivity conduit while facing challenges due to the inefficiency of multiple, disjointed monitoring tools, according to Modern Enterprises Must Boost Observability with Internet Performance Monitoring, a new report from EMA and Catchpoint ...

Choosing the right approach is critical with cloud monitoring in hybrid environments. Otherwise, you may drive up costs with features you don’t need and risk diminishing the visibility of your on-premises IT ...

Consumers ranked the marketing strategies and missteps that most significantly impact brand trust, which 73% say is their biggest motivator to share first-party data, according to The Rules of the Marketing Game, a 2023 report from Pantheon ...

Digital experience monitoring is the practice of monitoring and analyzing the complete digital user journey of your applications, websites, APIs, and other digital services. It involves tracking the performance of your web application from the perspective of the end user, providing detailed insights on user experience, app performance, and customer satisfaction ...

Enterprises are experiencing a 13% year-over-year increase in customer-facing incidents, reflecting rising levels of complexity and risk as businesses drive operational transformation at scale, according to the 2024 State of Digital Operations study from PagerDuty ...

According to Grafana Labs' 2024 Observability Survey, it doesn't matter what industry a company is in or the number of employees they have, the truth is: the more mature their observability practices are, the more time and money they save. From AI to OpenTelemetry — here are four key takeaways from this year's report ...

In an age where technology evolves at a breakneck pace, it's crucial to explore how AI assistants can revolutionize our work processes and daily lives, ultimately enhancing overall performance ...