Black Friday may be behind us for another year, but Free Shipping Day is just around the corner.

On the 18th of December, nearly 1,000 online stores will offer free shipping for last-minute holiday shoppers.

If you're one of these 1,000 retailers, no doubt you're rigorously stress testing your sites and apps to ensure they will run smoothly on the big day.

But is your testing enough to guarantee a smooth shopping day for your customers? Huge retailers like Best Buy, Tesco, Argos, John Lewis and Currys all had costly crashes on Black Friday 2014, despite extensive preparations and testing. On top of that, Free Shipping Day is likely to bring new, specific challenges for your site — and it's getting bigger every year.

Will Free Shipping Day be bigger than Black Friday?

2014 marks the 7th year for Free Shipping Day, and each year is bigger than the last. Since 2012, spending on Free Shipping Day has exceeded $1 billion and it has overtaken Black Friday's online spending for two years in a row. In fact, just two years after it was created, Free Shipping Day made its mark as the third heaviest day for online shopping in US history.

So there's a good chance that your website will be under even more stress this Thursday than it was on Black Friday. The good news is that you can learn from mistakes made by others to ensure your website will be able to handle the load. Here are three lessons that we can learn this year:

Lesson #1: Don't Underestimate the Load

Both Tesco and Best Buy underestimated the huge surge of traffic that came to their sites on Black Friday. Tesco reported having five times more customers than in 2013 and Best Buy witnessed "record levels of website traffic".

There are many reasons why traffic might be higher than you expected, such as the rising popularity of Free Shipping Day, the increasingly widespread use of online shopping and even the fact that you might be getting traffic from your competitors if their websites are down.

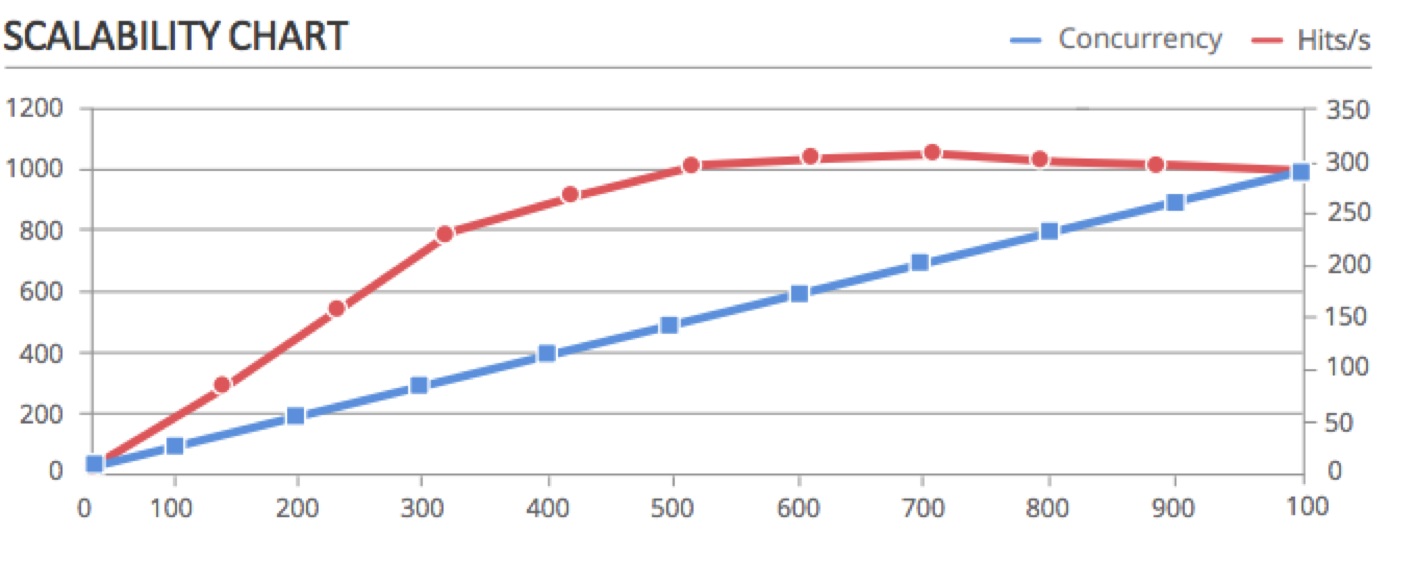

That's why it is important to push your system to the point of failure when running your load tests. Whether the failure is caused by CPU, memory, connection pools, network bandwidth or something else, you need to find it. I recommend running a sequence of tests in which you steadily increase the load while monitoring your throughput in hits/s. Keep going until you hit a scaling problem, which is the point where adding more users no longer increases throughput.

For example: the chart below shows where your system reaches its capacity (in this case after 300 virtual users).
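
The same plateau can also be located programmatically from the results of each load step. The following is a minimal sketch rather than a feature of any particular tool: the function name, the 5% threshold and the sample numbers are all assumptions chosen for illustration.

```python
from typing import List, Optional, Tuple


def find_capacity(samples: List[Tuple[int, float]],
                  min_gain: float = 0.05) -> Optional[int]:
    """Return the user count after which throughput stops scaling.

    samples:  (virtual_users, hits_per_second) pairs, ordered by increasing load.
    min_gain: smallest relative throughput gain between two steps that still
              counts as scaling (5% is an assumed threshold, tune it yourself).
    """
    for (prev_users, prev_hits), (_, hits) in zip(samples, samples[1:]):
        if prev_hits > 0 and (hits - prev_hits) / prev_hits < min_gain:
            return prev_users
    return None  # no plateau seen yet: keep increasing the load


# Illustrative numbers only, not measurements from a real system.
step_results = [(100, 410.0), (200, 820.0), (300, 1210.0),
                (400, 1225.0), (500, 1190.0)]
print(find_capacity(step_results))  # prints 300
```

If the function returns `None`, the test has not yet reached the scaling limit and the next run should use a higher load.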

Lesson #2: Recover Quickly from Technical Problems

On Black Friday, Tesco's website was down for 12 hours – half of the busiest shopping day of the year.

Every minute that your website is down, you could be losing hundreds of thousands of dollars in revenue. So, whether a crash is caused by high traffic loads or a power outage, it's vital to put plans in place to ensure you'll recover quickly.

I recommend having backup servers and locations ready so you can recover quickly if there's a problem. Set up database replication, a database failover cluster or an application failover cluster. If there's a problem, just switch over to the failover location. You won't have to wait for your main server to recover, because your backup can serve customers while you resolve the critical issues.
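
The switch-over decision itself can be very simple. Here is a rough sketch of the idea, assuming a priority-ordered list of sites and a health check you supply yourself. The site names are hypothetical and the health check is stubbed out; in practice it would probe a real status endpoint, and the switch would usually be handled by your load balancer or DNS.

```python
from typing import Callable, Optional, Sequence


def pick_active_site(sites: Sequence[str],
                     is_healthy: Callable[[str], bool]) -> Optional[str]:
    """Return the first healthy site in priority order, or None if all are down."""
    for site in sites:
        if is_healthy(site):
            return site
    return None


# Hypothetical site names, listed from most to least preferred.
SITES = ["primary-dc", "backup-dc"]

# Stubbed health check: pretend the primary location is currently down.
currently_down = {"primary-dc"}
print(pick_active_site(SITES, lambda site: site not in currently_down))  # prints backup-dc
```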

Lesson #3: Run Load Tests from Different Devices in the Production Environment

Shortly after Best Buy's Black Friday crash, a company spokesman gave the following statement: "A concentrated spike in mobile traffic triggered issues that led us to shut down BestBuy.com in order to take proactive measures to restore full performance."

Your traffic load won't come in equal measures from all devices – or locations for that matter. The vast majority of your traffic might come from one type of device and one specific region. That's why it's crucial to run your tests in different geo-locations and from different devices on your live production site. This is the best way to ensure that your testing is accurate, that your test plan is well organized and that you're stressing every point in the entire chain of delivery. I recommend testing in your production environment at a time when traffic is very low (such as 3am on a Sunday morning) and informing your customers that there may be some downtime during these hours.

There are various ways that you can run such tests. You can use an open source load testing tool and buy several Virtual Private Servers (VPS) in different geo-locations to test your web or app servers under heavy load from various geographical locations. Alternatively, you can use cloud performance testing tools to simulate the load from various devices and multiple geo-locations.
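
Whichever approach you choose, the virtual users should be split across devices and regions in roughly the same proportions as your real traffic. As a sketch, the helper below divides a total user count across (device, region) segments by weight. The function name, the segment labels and the percentages are invented for illustration; take the real mix from your own analytics.

```python
from typing import Dict, Tuple

Segment = Tuple[str, str]  # (device, region)


def allocate_users(total_users: int, mix: Dict[Segment, float]) -> Dict[Segment, int]:
    """Split total_users across segments in proportion to their weights."""
    weight_sum = sum(mix.values())
    exact = {seg: total_users * weight / weight_sum for seg, weight in mix.items()}
    alloc = {seg: int(value) for seg, value in exact.items()}
    # Hand out the users lost to rounding down, largest remainder first.
    leftover = total_users - sum(alloc.values())
    by_remainder = sorted(exact, key=lambda seg: exact[seg] - alloc[seg], reverse=True)
    for seg in by_remainder[:leftover]:
        alloc[seg] += 1
    return alloc


# Assumed traffic mix in percent; replace with figures from your own analytics.
traffic_mix = {
    ("mobile", "us-east"): 55,
    ("desktop", "us-east"): 25,
    ("mobile", "eu-west"): 15,
    ("desktop", "eu-west"): 5,
}
plan = allocate_users(1000, traffic_mix)
for segment, users in plan.items():
    print(segment, users)
```

Each entry in `plan` then tells you how many virtual users to launch from a given region with a given device profile.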

Specific Challenges of Free Shipping Day

In addition to learning from all of the above mistakes, it's also important to address the specific challenges that Free Shipping Day will bring.

For 24 hours only, developers need to ensure that the checkout tallies won't include shipping costs. Some companies will have a flexible framework in place that enables them to just click a checkbox to exclude shipping fees. However, most companies won't have such a framework in place. Instead, they will have software that needs adapting. In such cases, many developers will make ad-hoc changes to a few lines of code to remove the shipping fees for these key 24 hours.

However, this type of “quick fix” can lead to technical glitches or even incorrect pricing calculations. If the fix isn't implemented correctly or the developers fail to update every page that includes the shipping fees, your customers may see additional costs on pages that you overlooked.

In addition to these functional issues, all of the code changes might lead to unforeseen problems with the performance of your website or app.

To avoid such issues, it is critical to avoid making changes to the code at the last minute. You need to ensure that you have enough time and the right environment to check the repercussions of any changes that are made.
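
One way to limit the risk is to keep the free-shipping rule in a single place that every page calls, rather than editing each page separately. The sketch below assumes a fixed promotion window and a flat base fee. The names and dates are illustrative and are not taken from any particular e-commerce platform, and the naive datetimes ignore time zones, which a real store would need to handle.

```python
from datetime import datetime
from decimal import Decimal

# Assumed promotion window (naive datetimes; time zones are ignored here).
PROMO_START = datetime(2014, 12, 18, 0, 0)
PROMO_END = datetime(2014, 12, 19, 0, 0)


def shipping_fee(base_fee: Decimal, now: datetime) -> Decimal:
    """Return the fee to charge at `now`: zero inside the promotion window."""
    if PROMO_START <= now < PROMO_END:
        return Decimal("0.00")
    return base_fee


def order_total(subtotal: Decimal, base_fee: Decimal, now: datetime) -> Decimal:
    """Cart, checkout and confirmation pages would all call this one function."""
    return subtotal + shipping_fee(base_fee, now)


print(order_total(Decimal("40.00"), Decimal("5.99"), datetime(2014, 12, 18, 12, 0)))  # prints 40.00
print(order_total(Decimal("40.00"), Decimal("5.99"), datetime(2014, 12, 17, 12, 0)))  # prints 45.99
```

Because the rule lives in one function, it can be checked in the staging environment well ahead of time, and no code has to change on the day itself.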

Once the changes have been made, take the time to check the functionality in the staging or QA environment. It's worth asking your QA team to confirm that everything is operating as anticipated. Once the change has been verified from a functional perspective, you need to check that it also works at scale. To do this, it's best to run a load test in the production environment around four to five hours before Free Shipping Day officially begins. This will give you enough time to make any last-minute fixes that you need before your customers start flooding your site.

If you take all of these considerations into account, I'm confident that Free Shipping Day 2014 will be successful and profitable for you and your company.

Alon Girmonsky is Founder and CEO of BlazeMeter.