Start with: The CMDB of the Future - Part 1

Automating SOME of the Service Model Definition

The first step in making any system more automatic (and thus more reliable) is to dramatically reduce the amount of manual maintenance required. It is also essential that the methodology is deterministic and not heuristic (and prone to subtle errors). Let's use a concrete example to explore ways this can be done.

Consider a Java application that is built on a standard message-oriented integration platform. The application itself might depend on a JMS server and a few specific message queues, as well as an Oracle database, a Tomcat server, and several JVMs, all running on a couple of VMware virtual machines (VMs).

Using a strictly manual approach, one would list each individual component on which the application is dependent, and in doing so build the service model or CMDB table.

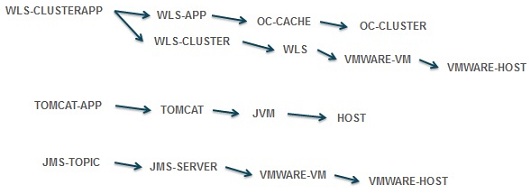

However, we can be smarter and automate much of the CMDB definition process if we take advantage of known dependencies between data types, as shown in the diagram. For example, a TOMCAT-APP runs within a TOMCAT server, which is a JVM that runs on a specific HOST. Similar logic could be applied to JMS Topics, WebLogic Applications, and many other component types.

By applying these rules, it is possible to specify just the name of the JMS-TOPIC and the URL of the TOMCAT-APP, and all the related components of the application can be derived automatically. With just two entries in the CMDB it is possible to deterministically describe the service model for this application ... and subsequently aggregate the important metrics for each component to calculate and present a measure of the health state of that application, as well as alert on exceptional conditions.
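The derivation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `RUNS_ON` rule table, the `INVENTORY` lookup, and every component name in it are hypothetical stand-ins for data a monitoring system would already collect, and the TOMCAT-to-JVM step is modeled as a separate link purely for simplicity.

```python
# Hypothetical rule table: each component type maps to the type it runs on.
RUNS_ON = {
    "TOMCAT-APP": "TOMCAT",
    "TOMCAT": "JVM",
    "JMS-TOPIC": "JMS-SERVER",
    "JMS-SERVER": "JVM",
    "JVM": "HOST",
}

# Hypothetical discovered inventory: (type, name) -> name of the parent component.
INVENTORY = {
    ("TOMCAT-APP", "http://host1:8080/inventory"): "tomcat-1",
    ("TOMCAT", "tomcat-1"): "jvm-101",
    ("JVM", "jvm-101"): "vm-a",
    ("JMS-TOPIC", "INVENTORY.TOPIC.1"): "jms-1",
    ("JMS-SERVER", "jms-1"): "jvm-202",
    ("JVM", "jvm-202"): "vm-b",
}


def derive_components(ci_type, name):
    """Follow the RUNS_ON rules upward from one top-level entry."""
    chain = [(ci_type, name)]
    while ci_type in RUNS_ON:
        parent_name = INVENTORY[(ci_type, name)]
        ci_type, name = RUNS_ON[ci_type], parent_name
        chain.append((ci_type, name))
    return chain


def build_service_model(entries):
    """Expand a short list of top-level entries into the full component list."""
    model = []
    for ci_type, name in entries:
        for ci in derive_components(ci_type, name):
            if ci not in model:
                model.append(ci)
    return model


# Only two entries are specified by hand; the rest is derived.
service_model = build_service_model([
    ("JMS-TOPIC", "INVENTORY.TOPIC.1"),
    ("TOMCAT-APP", "http://host1:8080/inventory"),
])
```

Because every step is a table lookup, the same two entries always produce the same model, which is the deterministic property the approach depends on.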

This technique can be even more effective if the identifier for each top-level component can be readily associated with the application that uses it. For example, if a queue associated with the "Inventory" application is named INVENTORY.QUEUE.1 and the one used by "Order Processing" is ORDERS.QUEUE.1, it is easy to parse the names and automatically populate the CMDB service model with the proper dependencies as well as dynamically update it with zero manual intervention.
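A minimal sketch of the name-parsing idea follows, assuming queue names take the form `PREFIX.QUEUE.N` as in the examples above. The `PREFIX_TO_APP` table and the function name are hypothetical; a real deployment would substitute its own naming convention.

```python
import re
from collections import defaultdict

# Hypothetical mapping from queue-name prefix to application name.
PREFIX_TO_APP = {
    "INVENTORY": "Inventory",
    "ORDERS": "Order Processing",
}

# Assumed naming convention: <PREFIX>.QUEUE.<number>
QUEUE_NAME = re.compile(r"^(?P<prefix>[A-Z]+)\.QUEUE\.(?P<index>\d+)$")


def group_queues_by_app(queue_names):
    """Assign each queue to an application based on its name prefix."""
    model = defaultdict(list)
    unmatched = []
    for queue in queue_names:
        match = QUEUE_NAME.match(queue)
        app = PREFIX_TO_APP.get(match.group("prefix")) if match else None
        if app is None:
            # Names that do not follow the convention are reported, not guessed.
            unmatched.append(queue)
        else:
            model[app].append(queue)
    return dict(model), unmatched


model, unmatched = group_queues_by_app(
    ["INVENTORY.QUEUE.1", "ORDERS.QUEUE.1", "ORDERS.QUEUE.2", "tmp-queue"]
)
```

Queues that do not match the convention are returned separately rather than assigned by guesswork, which keeps the mapping deterministic.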

In working with dozens of larger organizations, SL Corporation has seen greater adoption of these techniques and best practices to achieve more reliability and automation in monitoring critical applications. But there is a big change in the IT landscape that influences CMDB evolution in an entirely different way.

Automating ALL of the Service Model Definition

The introduction of virtualization technology has spawned a significant revolution in computing, somewhat akin to the transition from discrete semiconductor components of the 1950s to the era of fully integrated circuits. What used to take hundreds of individual components wired together on a circuit board could now be embedded in a single chip and stamped out in huge volumes. The integrated circuits of today routinely contain billions of components on a single chip.

Though still in the early stages, something similar has happened with virtualization and software as a service. In an instant you can provision a virtual machine with a complete operating system of your choice (Infrastructure as a Service). You can also include middleware components like a full-featured Application Server (Platform as a Service). With custom application software, we are not as far along ... you still need to wire lots of virtualized components together to make a complex integrated application.

It is easy to see the obvious benefits of virtualization for providing faster and cheaper access to computing power. But not everyone recognizes that there is a more subtle, yet powerful, force at work here ... one that can significantly enhance the ability to automate and make deterministic the monitoring of the health state of complex applications. It used to be that IT would order hardware and after it arrived would manually configure the operating system and other platform software required by application developers. Not so anymore.

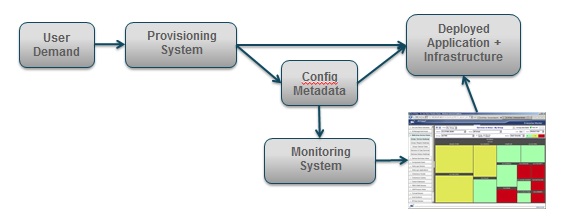

With virtualization, the provisioning of a new "platform" is completely data-driven. This means that configuration information about the physical location, IP address, service names and ports for all components is maintained in a file or database table (referred to as metadata) and is used to deploy the requested components.

This deployment metadata can do "double duty" and be used to configure a comprehensive monitoring solution to accompany each application. Ideally, every component of a complex application, from infrastructure to middleware to custom processes, and all their interdependencies will be identified in the metadata, providing precisely the functionality that has eluded developers of the current generation of CMDB.
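To make the "double duty" idea concrete, here is a rough sketch that turns a deployment record into a list of monitoring targets. The metadata layout (`application`, `components`, `runs_on`, and so on) is an assumption made for illustration; actual provisioning systems each use their own formats.

```python
# Hypothetical deployment metadata, shaped like what a provisioning
# system might keep in a file or database table.
DEPLOYMENT = {
    "application": "Inventory",
    "components": [
        {"id": "vm-a", "type": "HOST", "ip": "10.0.0.11"},
        {"id": "tomcat-1", "type": "TOMCAT", "runs_on": "vm-a", "port": 8080},
        {"id": "oracle-1", "type": "ORACLE-DB", "runs_on": "vm-a", "port": 1521},
    ],
}


def monitoring_targets(deployment):
    """Derive one monitoring target per deployed component."""
    by_id = {c["id"]: c for c in deployment["components"]}
    targets = []
    for component in deployment["components"]:
        # Follow runs_on links until reaching the component that owns the IP.
        host = component
        while "runs_on" in host:
            host = by_id[host["runs_on"]]
        targets.append({
            "application": deployment["application"],
            "component": component["id"],
            "type": component["type"],
            "address": host["ip"],
            "port": component.get("port"),
            "depends_on": component.get("runs_on"),
        })
    return targets


targets = monitoring_targets(DEPLOYMENT)
```

The same record that tells the provisioning system where to deploy each component also tells the monitoring system where to find it and what it depends on, so nothing has to be entered twice.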

Eventually, this "CMDB of the Future" will be one and the same as the deployment metadata used in provisioning systems. At a minimum it would be generated automatically. There is already a well-known CMDB data definition consisting of a repository of Configuration Items (CIs) and the relationships between them. Up to now it has been difficult to construct a dependable CMDB, whether manually or heuristically. However, by automatically deriving it from provisioning metadata, we could finally see emerge a completely deterministic and reliable CMDB used to map the health state of underlying components to the business services that are dependent on them.
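As a small illustration of mapping component health to a business service, the sketch below models CIs and their dependency relationships and rolls health up with a simple worst-state rule. The class, the three state names, and the rollup rule are all assumptions chosen for the example, not a standard CMDB schema.

```python
from dataclasses import dataclass, field

# Assumed severity ordering for the example states.
SEVERITY = {"ok": 0, "warning": 1, "critical": 2}


@dataclass
class CI:
    """A Configuration Item plus the CIs it depends on."""
    name: str
    ci_type: str
    state: str = "ok"
    depends_on: list = field(default_factory=list)


def health(ci, seen=None):
    """Return the worst state among a CI and everything it depends on."""
    seen = set() if seen is None else seen
    if ci.name in seen:
        # Already counted through another path in this rollup.
        return "ok"
    seen.add(ci.name)
    states = [ci.state] + [health(dep, seen) for dep in ci.depends_on]
    return max(states, key=SEVERITY.__getitem__)


# Hypothetical relationships for one business service.
host = CI("vm-a", "HOST", state="warning")
jvm = CI("jvm-101", "JVM", depends_on=[host])
service = CI("Inventory", "SERVICE", depends_on=[jvm])

service_health = health(service)
```

Here a warning on the host surfaces as a warning on the "Inventory" service, because the relationships tell the rollup exactly which components matter to which service.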

Clearly, we are quite a ways from this goal, but progress may be just ahead. Sometimes the most significant advances are not the latest and greatest that you read about in the trade press but instead are the ones going on quietly behind the scenes and without a lot of fanfare. I suspect that the next-generation Configuration Management Database may be just such a thing.

ABOUT Tom Lubinski

Tom Lubinski is President and CEO, and Board Chairman, of SL Corporation, which he founded in 1983. Lubinski has been instrumental in developing SL's Graphical Modeling System Software (SL-GMS) and the more recent RTView software. Prior to starting SL Corporation, he attended the California Institute of Technology and developed a substantial consulting practice specializing in Object-Oriented Programming and Graphical Visualization Systems. He has more than 30 years of experience in the development of computer hardware systems and software applications.