Do you watch basketball?

I'm a big basketball fan. I love it!

And what sports fan doesn't have a team they root for? My team? It's the National Basketball Association's New York Knicks. I love my Knicks.

But for a bunch of years now, they haven't been loving me back with all the losing they've been doing.

And with the new season under way, nothing seems to have changed … yet. We're still losing.

Despite that, I always want to know what's going on with my team. So I'm always on their website at nyknicks.com to see what the latest news is.

What's happening with Carmelo Anthony? Or Kristaps Porzingis?

Are Derrick Rose and Joakim Noah gelling with their new teammates?

What do people think of the job the new coach is doing?

But just like watching the team play, being on the Knicks website isn't always a pleasant experience. It can be slow.

Always one to investigate, I wanted to see why the Knicks site is so slow sometimes.

But how do you do that?

Enter WebPageTest

WebPageTest is an open source tool for measuring and analyzing the performance of web pages. Using either the public online instance or a private instance that you download and run yourself, you can test the performance of your website or web application to see where improvements can be made.

Or in my case, your team's website.

While there's nothing I can necessarily do about the Knicks site directly, you can do something for your websites and web applications.

Google the Scrutinizer

With the increased scrutiny on web performance by Google, companies with B2C and SaaS B2B models must ensure that their websites and web applications are fast.

It's widely accepted, partly on the basis of Jakob Nielsen's book Usability Engineering, that a web page should load in about 1 second. Beyond that point, users begin to notice that an interface is slow.

Case studies from Google, Amazon, Akamai and others have shown that users start thinking about going elsewhere if your site's performance is consistently slower than that. Most are gone by the time 10 seconds comes around. If your site or application relies on users buying what you're selling, those seconds can mean the difference between a visitor buying from you or your competitor.

The Knicks are an NBA team, and so their business model is largely B2C with fans and other types of consumers visiting their site. People like me are on their site all the time to get the latest information about the team, and possibly buy game tickets or player jerseys.

Obviously, there's every incentive to make their site fast for me and other Knicks fans out there.

Let's Go Knicks … Site Testing

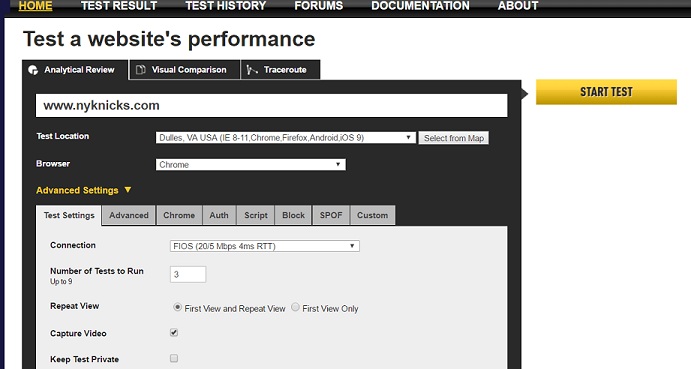

So I fire up webpagetest.org, and I input the Knicks website address.

My Internet connection is only DSL. So for my initial test, I wanted to reduce the impact of lower bandwidth. To do that, I picked a FIOS connection with a 20Mbps download speed instead.

I also wanted to run multiple tests. A single run can give anomalous results. Luckily, when you run multiple tests, WebPageTest reports the median result of all the tests that you run.
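To see why the median is the number to trust here, consider a minimal sketch. The load times below are made up for illustration, not taken from my Knicks tests; one slow run is enough to drag the average up while the median barely notices.

```python
from statistics import median

# Hypothetical load times in seconds from five runs of the same test.
# The third run is an outlier (say, a network hiccup).
load_times = [4.2, 4.5, 11.8, 4.4, 4.3]


def summarize(times):
    """Return (mean, median) for a list of load times."""
    return sum(times) / len(times), median(times)


mean_time, median_time = summarize(load_times)
print(f"mean: {mean_time:.2f}s, median: {median_time:.2f}s")
```

The mean gets pulled toward the outlier, while the median stays with the typical runs.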

The Key Metrics to Success

To improve the speed of your site or application, whether on your internal or external network, focus on three key metrics that point you in the right direction when planning for or solving performance issues. These metrics are the total number of requests, the total amount of data transferred, and response time.

I'm focusing on these metrics as I analyze the slowness of the Knicks website.

Follow along with me in this 3-part blog series, so you can do the same for your site or web app too.

Let's start with the first metric ...

Metric #1: Number of HTTP Requests

In HTTP, each click in the browser generates some kind of request from the client. That request can either go all the way back to the server, or it can be fulfilled by your local computer via its cache or even by something in the middle, like a WAN accelerator.

HTTP defines a number of different request methods. They include GET, POST, DELETE, and CONNECT, among others.

The two most widely used, however, are GET and POST.

A GET request is used to do exactly what the name implies: to get data from a server. The POST request typically does the reverse of a GET – it posts or sends data to a server. However, a POST can also be used to get data from a server. This usually occurs for dynamic data that a web application may not want the browser to cache.
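Here is a rough sketch of the difference, using Python's standard `urllib`. The `example.com` URLs and the form fields are placeholders I made up, and nothing is actually sent over the network; the code only builds the two request objects so you can see which method each one would use.

```python
from urllib.parse import urlencode
from urllib.request import Request

# example.com is a placeholder host; these requests are built but never sent.

# A GET carries its parameters in the URL and has no body.
get_req = Request("https://example.com/scores?team=knicks")

# A POST carries its data in the request body.
form = urlencode({"team": "knicks", "section": "news"}).encode("ascii")
post_req = Request("https://example.com/search", data=form)

print(get_req.get_method(), get_req.full_url)
print(post_req.get_method(), post_req.data)
```

With `urllib`, attaching a body is what turns the request into a POST; without one, it defaults to GET.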

You Rang?

Each request generally requires a response of some sort, even if it's just to say "OK, I got it." So for each request that goes across the network to the server, you can expect a response that does the reverse toward the client.

And unless you're caching everything, you want to limit the number of requests going to and from the server across the network.

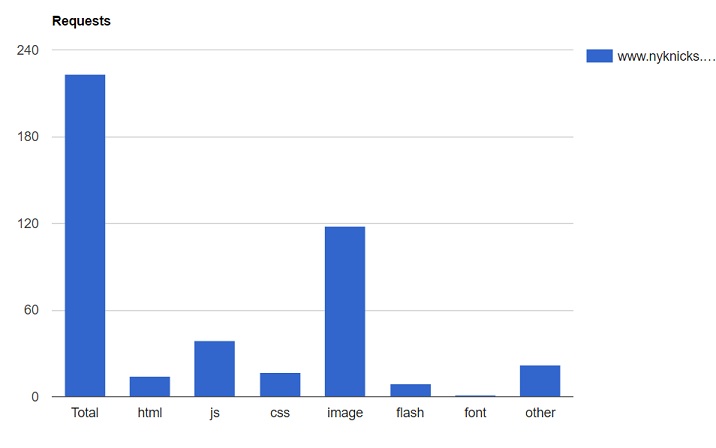

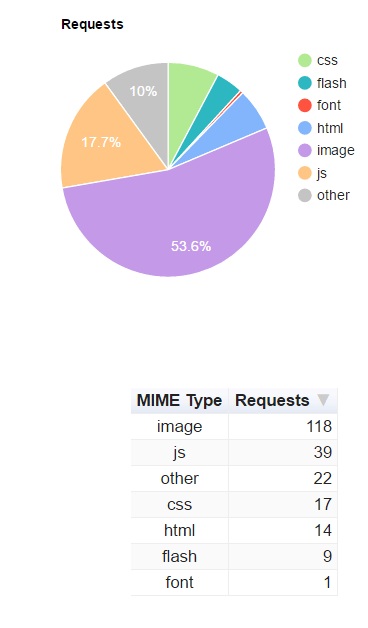

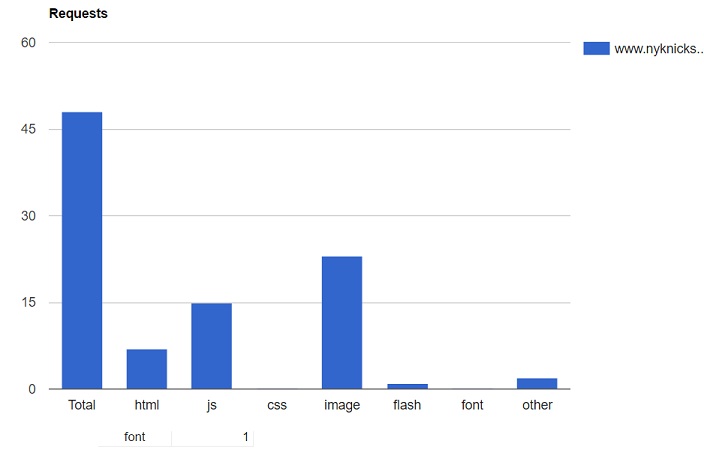

In my test of the Knicks website, I see that they have a LOT of requests.

Going to nyknicks.com ended up generating over 220 HTTP requests, most of which were GETs.

Many of these requests were to various domains used to display everything on the site, but 220 is a lot.

HTTP is an inherently chatty protocol. Separate requests are made for even the smallest image and script files.

My test on the Knicks site generated almost 120 image requests and 40 JavaScript requests. Each of these must not only traverse the network, but time must be taken to process them – by both the server and the client.

And when you include other domains – which is typical these days – you open yourself up to additional requests that add more time to process each of them, more time to open up each connection for these requests, and more time to traverse the network, etc.
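If you want to tally this kind of breakdown yourself, something like the sketch below would do it. The list of requests is a tiny made-up sample with placeholder domains, not the actual Knicks results; in practice you would fill it from whatever your testing tool exports.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical sample of (URL, content type) pairs seen while loading one page.
requests_seen = [
    ("https://www.example.com/", "text/html"),
    ("https://www.example.com/css/site.css", "text/css"),
    ("https://cdn.example.net/js/app.js", "application/javascript"),
    ("https://cdn.example.net/img/logo.png", "image/png"),
    ("https://cdn.example.net/img/hero.jpg", "image/jpeg"),
    ("https://ads.example.org/pixel.gif", "image/gif"),
]


def count_requests(records):
    """Count requests per host and per top-level content type."""
    by_host = Counter(urlparse(url).hostname for url, _ in records)
    by_kind = Counter(ctype.split("/")[0] for _, ctype in records)
    return by_host, by_kind


by_host, by_kind = count_requests(requests_seen)
print("total requests:", len(requests_seen))
print("by host:", dict(by_host))
print("by kind:", dict(by_kind))
```

Even in this small sample, half the requests go to a second domain and half are images, which is the same pattern the Knicks test showed on a much larger scale.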

So requests should be limited as much as possible.

But how do you limit them?

Cache Me If You Can

One of the ways to limit the number of requests is to just not send them to the server. Responses to GET requests can often be cached, especially for static files like scripts. Responses to POST requests are most often not cacheable, but depending on the design of your website, you may still be able to cache certain information.

So instead of having to go across the network to get a resource, the client can simply check its cache for that resource, and save itself the roundtrip time.
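The idea can be sketched with a toy client that keeps everything it has fetched. This is a simplification under my own assumptions: `fake_fetch` stands in for a real network call, the resource paths are invented, and a real browser also honors expiry and validation rules before reusing a cached copy.

```python
class CachingClient:
    """Toy client that only goes to the network for resources it has not seen."""

    def __init__(self, fetch):
        self._fetch = fetch          # function that performs the "network" call
        self._cache = {}             # url -> previously fetched content
        self.network_requests = 0    # how many times we crossed the network

    def get(self, url):
        if url not in self._cache:
            self.network_requests += 1
            self._cache[url] = self._fetch(url)
        return self._cache[url]


def fake_fetch(url):
    # Stand-in for a real HTTP request; no network traffic happens here.
    return f"contents of {url}"


# Hypothetical resources needed to render one page.
page_resources = ["/index.html", "/css/site.css", "/js/app.js", "/img/logo.png"]

client = CachingClient(fake_fetch)

for url in page_resources:           # first visit: empty cache
    client.get(url)
first_visit = client.network_requests

for url in page_resources:           # repeat visit: primed cache
    client.get(url)
repeat_visit = client.network_requests - first_visit

print("first visit:", first_visit, "network requests")
print("repeat visit:", repeat_visit, "network requests")
```

The first visit pays for every resource; the repeat visit is served entirely from the cache in this idealized version.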

For new users of your web application, this may not help a whole lot, but this will certainly benefit returning users.

In WebPageTest, I can run a number of tests both with an empty cache and with a primed cache. By doing that for the Knicks site, I can see that the number of requests was reduced significantly the second and third times the test was run.

After caching, the number of requests went from over 220 in the empty-cache test to just under 50 in the primed-cache test. That's a 77% decrease! Pretty good!
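For anyone who wants to check the math, here it is as a quick sketch. The 220 and 50 are the rounded figures from my tests ("over 220" and "just under 50"), so treat the results as approximate.

```python
def percent_decrease(before, after):
    """Percentage drop going from `before` to `after`."""
    return (before - after) / before * 100


def reduction_factor(before, after):
    """How many times smaller `after` is than `before`."""
    return before / after


empty_cache_requests = 220   # rounded figure from the empty-cache test
primed_cache_requests = 50   # rounded figure from the primed-cache test

drop = percent_decrease(empty_cache_requests, primed_cache_requests)
factor = reduction_factor(empty_cache_requests, primed_cache_requests)
print(f"{drop:.0f}% fewer requests, roughly {factor:.1f}x fewer")
```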

How nice would it be for the Knicks to get a 4x decrease in losses this season? That would put us in Golden State Warriors territory!

OK, maybe I'm dreaming. Back to the article!

So what about new users? How can you help them?

The Combine

Another way to reduce the number of requests is to limit the number of resources the client needs. This is especially useful for new users.

For example, if you have numerous JavaScript and CSS files the client needs to parse, each of these files will generate a request.

You can combine the JavaScript files into one or a couple of files, and have the client request just those couple of files instead of the many. Something similar can be done for CSS files.
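As a bare-bones sketch of the idea, the snippet below stitches several scripts into one bundle. The file names and their contents are invented for illustration, and a real build tool does far more (minifying, ordering dependencies, and so on); this only shows how many requests collapse into one.

```python
# Hypothetical script files and their contents, as a page might reference them.
scripts = {
    "menu.js": "function openMenu() {}",
    "scores.js": "function loadScores() {}",
    "tickets.js": "function buyTickets() {}",
}


def combine(sources):
    """Join named script sources into a single bundle.

    Each part is labeled with a comment and followed by a lone semicolon,
    a common safeguard in case a file does not end its last statement.
    """
    parts = []
    for name, text in sources.items():
        parts.append(f"/* {name} */\n{text.rstrip()}\n;")
    return "\n".join(parts)


bundle = combine(scripts)

print("requests before combining:", len(scripts))
print("requests after combining: 1")
print(bundle)
```

Three script requests become one, and the browser still receives all of the same code.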

Because of HTTP's chatty nature, reducing the number of requests that a browser sends is the quickest way to reduce overall response time. You avoid penalizing the user by having their requests traverse the network unnecessarily.

Doing so can be complex depending on the design of your site or application, but your users and customers will be happy when they encounter a fast site the first time around, and even faster the next time they return.

Read 3 Key Metrics for Successful Web Application Performance - NBA Edition Part 2, where I discuss metric #2.