Metrics-oriented thinking is key to continuous improvement and a core tenet of any agile or DevOps philosophy. Metrics are factual: once a team agrees on them, they serve as facts that drive discussions and methods. They also enable a collaborative effort to carry out decisions that contribute to business outcomes.

Although DevOps is becoming a commonly used job title, it is not a role or a person, and there is no playbook or rule set to follow. Instead, DevOps is a philosophy that spans people, process, and technology. Its goal is to release better software more rapidly and to keep that software up and running by joining development and operational responsibilities together.

DevOps also aims to improve business outcomes, but selecting the right metrics and collecting the metric data are both challenging. Continuous improvement requires continuous change, measurement, and iteration. The agreed-upon metrics not only drive this cycle but also create insights for the broader organization.

Data-Driven DevOps

A successful DevOps transformation focuses on a couple of areas. To start, a culture change is needed between development and operations teams. The second area is measurement, another core tenet of DevOps. To accomplish a true DevOps transformation, it’s important to measure the current situation, regularly review metrics that indicate improvement or degradation, and use those measurements as facts when making decisions. These metrics should span several areas that may have been considered disjointed in the past.
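
As a minimal sketch of reviewing a metric for improvement or degradation, the Python below compares the mean of a baseline window against the mean of a current window and labels the change. The function `review_metric`, the 5% `tolerance` threshold, and the sample metric names and values are hypothetical illustrations rather than part of any particular tool; a real review would also need to account for noise and sample size.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class MetricReview:
    name: str
    baseline: float
    current: float
    verdict: str


def review_metric(name, baseline_samples, current_samples, higher_is_better, tolerance=0.05):
    """Compare a current window of a metric against a baseline window.

    tolerance is a hypothetical relative threshold: changes smaller than this
    fraction of the baseline mean are reported as "unchanged".
    """
    baseline = mean(baseline_samples)
    current = mean(current_samples)
    if baseline == 0:
        raise ValueError("baseline mean must be non-zero")
    change = (current - baseline) / abs(baseline)
    if abs(change) <= tolerance:
        verdict = "unchanged"
    elif (change > 0) == higher_is_better:
        verdict = "improved"
    else:
        verdict = "degraded"
    return MetricReview(name, baseline, current, verdict)


# Usage with made-up numbers: lead time (hours) where lower is better.
lead = review_metric("lead_time_hours", [30, 26, 34], [20, 22, 18], higher_is_better=False)
print(lead.name, lead.verdict)  # lead_time_hours improved
```

The `higher_is_better` flag keeps the same function usable for metrics such as deployment frequency (higher is better) and lead time or failure rate (lower is better).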

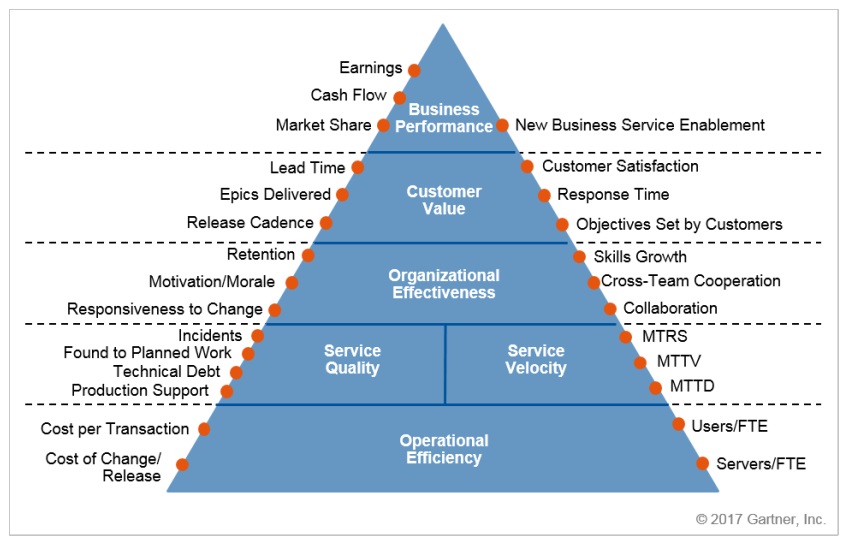

To help DevOps teams think of possible metrics and how these metrics relate to key initiatives, Gartner recently released this useful metrics pyramid for DevOps:

Many of these metrics span development, operations, and, most importantly, the business. They measure efficiency, quality, and velocity. However, Gartner points out that the hardest part is often defining which metrics we can collect, act on, audit, and use to drive a lifecycle.

The second challenge, which Gartner does not discuss, is how to link these metrics together to offer meaningful insights. If the metrics cannot connect a release to business performance, attribution gaps remain. Unfortunately, many enterprises today analyze metrics that have little or no linkage between them.

Context is critical for establishing these relationships. Without context, metrics are open to interpretation, especially as you move up the Gartner pyramid. It is therefore crucial to link metrics together and to attribute changes in earnings or cash flow to the specific release or change that improved the application.
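
A minimal sketch of linking a release to a business metric, assuming release timestamps and hourly revenue samples are already available from some data store. The `Release` type, the `metric_around_release` function, and the sample data are hypothetical. The sketch compares equal windows before and after a deployment and tags the result with the release version, which is the kind of context that ties the two metrics together. Note that a before/after comparison only suggests attribution; it does not prove causation, because seasonality or marketing events can move the same numbers.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Release:
    version: str
    deployed_at: datetime


def metric_around_release(release, samples, window=timedelta(hours=24)):
    """Average a business metric in equal windows before and after a release.

    samples is a list of (timestamp, value) pairs, e.g. hourly revenue.
    A window with no samples yields None instead of a mean.
    """
    start = release.deployed_at - window
    end = release.deployed_at + window
    before = [value for ts, value in samples if start <= ts < release.deployed_at]
    after = [value for ts, value in samples if release.deployed_at <= ts < end]
    return {
        "version": release.version,  # context: which release this comparison belongs to
        "before": mean(before) if before else None,
        "after": mean(after) if after else None,
    }


# Usage with made-up data: hourly revenue steps from 100 to 110 at the deploy.
deploy = Release("v2.3.0", datetime(2024, 1, 1, 12))
hourly = [(datetime(2024, 1, 1, h), 100 if h < 12 else 110) for h in range(24)]
print(metric_around_release(deploy, hourly, window=timedelta(hours=6)))
```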

Metrics should also provide visibility inside the application without creating an additional burden for developers. With automated instrumentation, metric data can be produced consistently and comprehensively across all teams. This is extremely beneficial because teams often collect data in different ways, which has traditionally led to inconsistencies. Measurements should always be obtained consistently from both the application components and the application’s desired business outcomes.
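
The sketch below illustrates the idea behind automated instrumentation with a plain Python decorator: every decorated function records calls, errors, and cumulative latency under the same naming scheme, so teams get consistent metrics without writing measurement code themselves. The `instrumented` decorator, the in-memory `METRICS` registry, and the `apply_tax` example are hypothetical; in production this role is usually filled by an APM agent or a library such as OpenTelemetry that exports to a monitoring backend.

```python
import functools
import time
from collections import defaultdict

# Hypothetical in-memory registry keyed by "module.function"; a real setup
# would export these values to a monitoring backend instead.
METRICS = defaultdict(lambda: {"calls": 0, "errors": 0, "total_seconds": 0.0})


def instrumented(func):
    """Record calls, errors, and cumulative latency for func automatically."""
    key = f"{func.__module__}.{func.__qualname__}"

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        METRICS[key]["calls"] += 1
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        except Exception:
            METRICS[key]["errors"] += 1
            raise
        finally:
            # Runs on success and on failure, so latency is always recorded.
            METRICS[key]["total_seconds"] += time.perf_counter() - start

    return wrapper


# Usage: the business function contains no measurement code of its own.
@instrumented
def apply_tax(amount):
    if amount < 0:
        raise ValueError("amount must be non-negative")
    return round(amount * 1.2, 2)


apply_tax(10)
try:
    apply_tax(-1)
except ValueError:
    pass
print(dict(METRICS))
```

Because the metric names are derived from the function itself, every team that applies the decorator produces the same fields in the same format.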